I’m beyond excited to share a new project I’ve been working on for the last couple of months!

At various points in my career, I have flirted with the idea of creating a startup. During my last 2 years at Meta, especially, a big part of me wanted to be a product manager. But a couple of things stopped me. First, I never felt like any of my ideas were quite compelling enough for the time and energy they would take to pursue. Second, and just as importantly, I never had the space to explore and experiment with ideas that didn’t have a clear return on investment.

Well, both of those things finally came together: a great idea, and the time and space to pursue it.

I found a problem

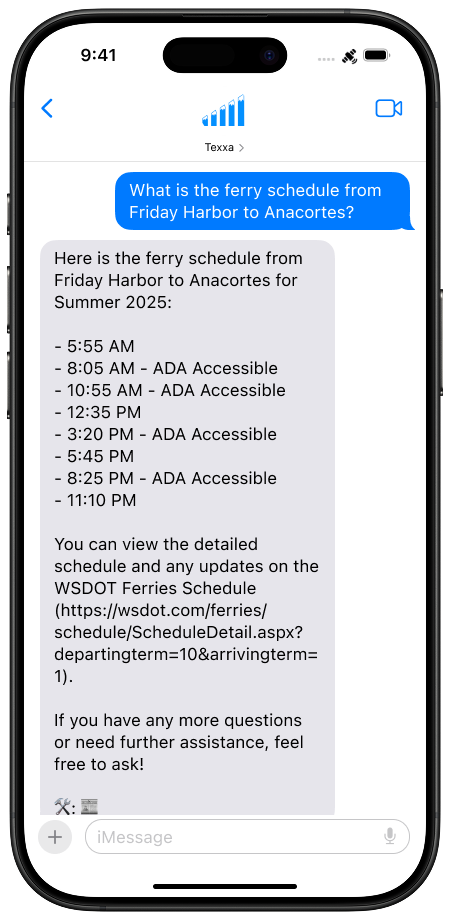

I was camping on San Juan Island with no reception and got to actually try Apple’s new-ish satellite messaging for the first time. I had been planning out our next day and wishing I could just look up the ferry schedule for our trip home to Seattle.

And then it clicked: why couldn’t I just send a text with my newfound satellite messaging superpowers to ChatGPT and have it look up the schedule for me?

It was a familiar wish. For years I had wanted exactly this whenever I was in the backcountry, on a plane (before ubiquitous in-flight Wi-Fi), or stuck on 2G and needed to Google something, whether for a critical piece of information or just to sate my often-burning curiosity.

So I made something new!

It turns out you can’t just send a text to ChatGPT. So, with the help of numerous AI agents, I prototyped my own AI agent in under a week.

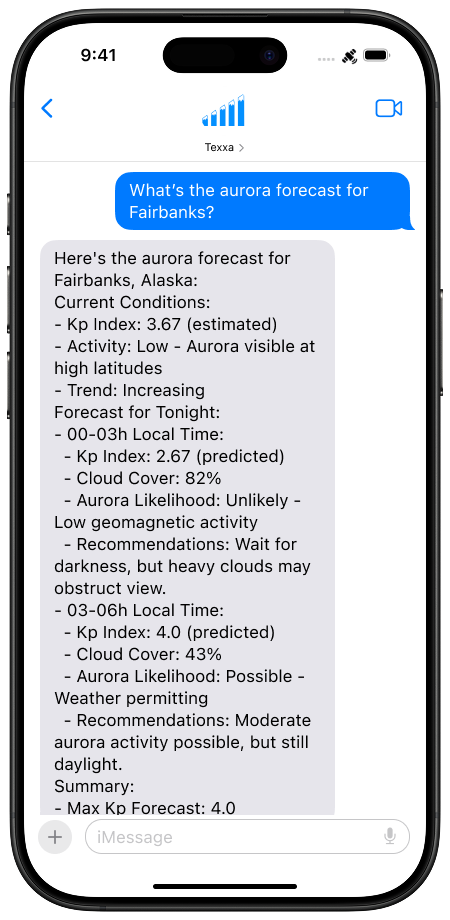

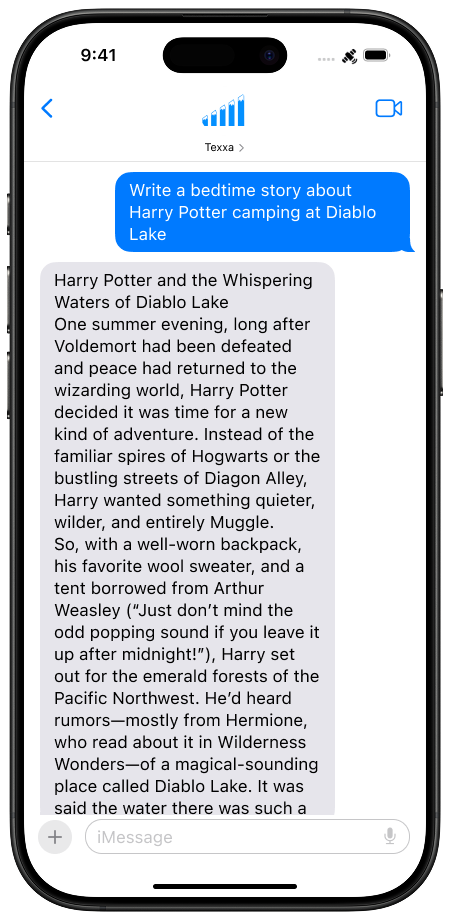

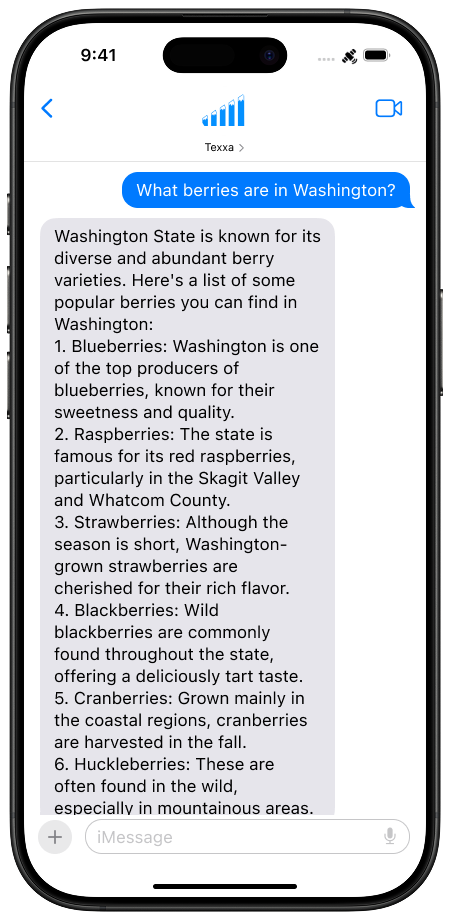

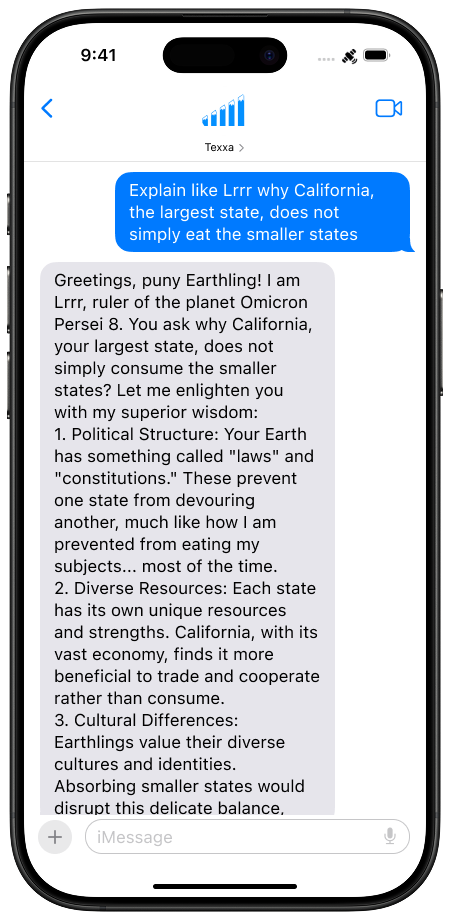

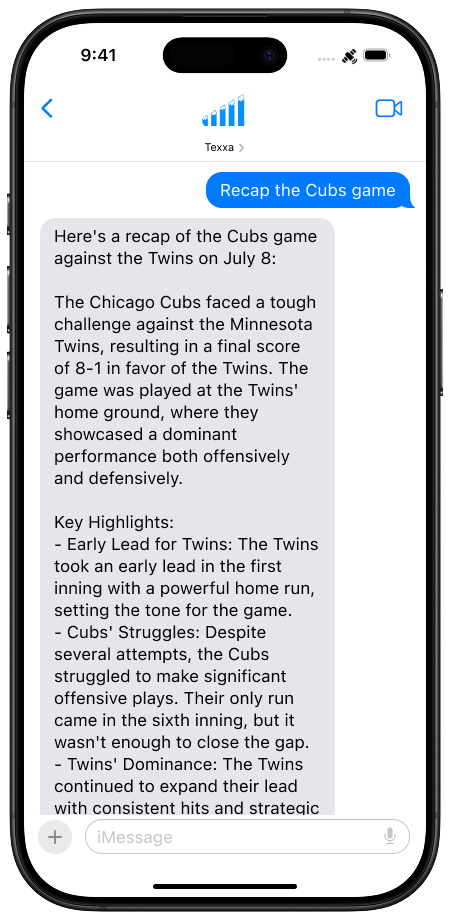

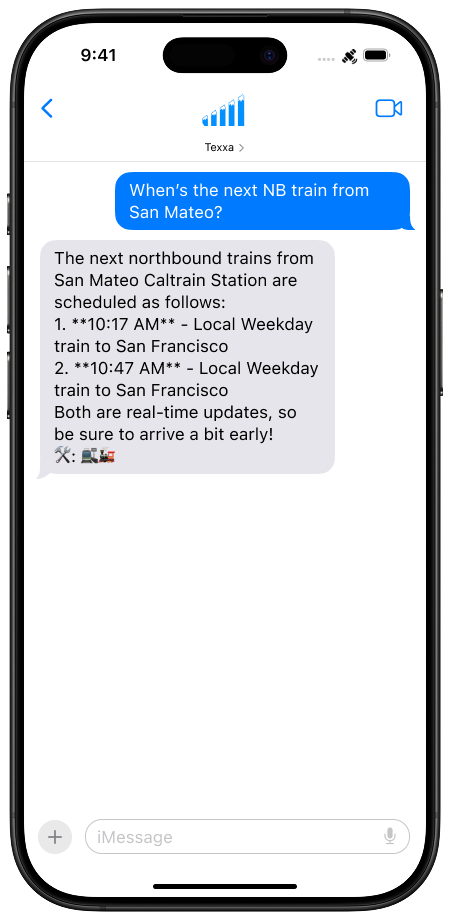

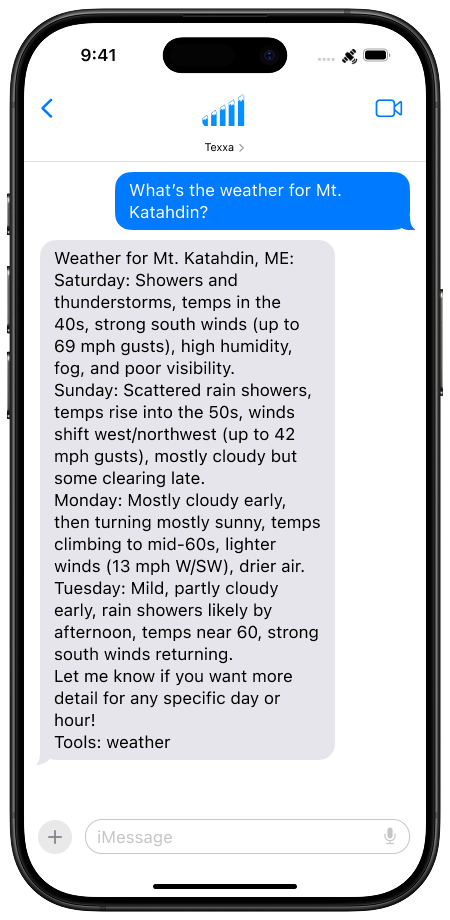

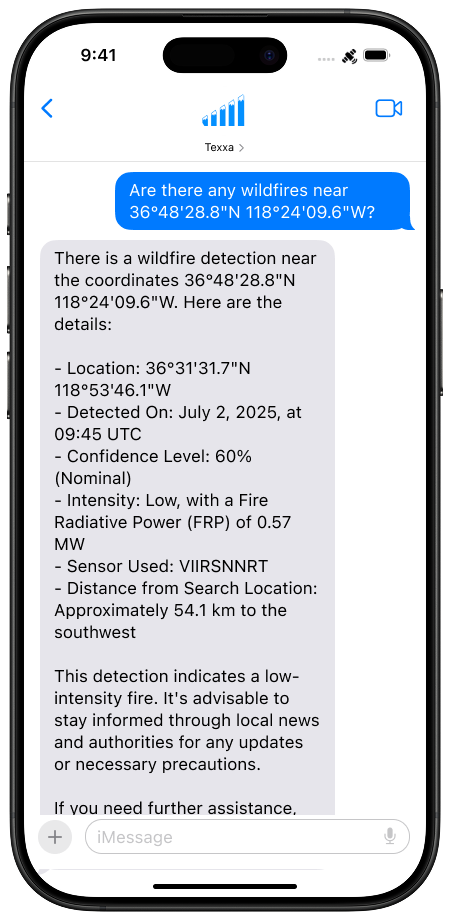

I’m proud to introduce Texxa! You can text it from nearly anywhere, and it will scour the internet for information, distill it all into a concise answer, and then transmit back just that answer.

Because I love naming things, I call this agentic compression, and it lets you do things that previously weren’t possible without a proper internet connection or, maybe, an expensive satellite phone.
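As a rough illustration of the last step of agentic compression, here is a minimal, hypothetical sketch (not Texxa’s actual code) of squeezing a distilled answer into a fixed number of SMS segments. It assumes plain GSM-7 text where every character costs one septet, so a standalone message holds 160 characters and each part of a concatenated message holds 153 (the concatenation header uses the rest). Extension characters such as `{` or `€`, which cost two septets, are not handled, and the names `segments_needed` and `fit_to_budget` are made up for this example.

```python
# Hypothetical sketch, not Texxa's implementation: trim a distilled answer so it
# fits in a fixed number of SMS segments.
# Assumption: the text uses only basic GSM-7 characters (1 septet each);
# extension characters like "{" or "€" cost 2 septets and are NOT handled here.

SINGLE_SEGMENT = 160  # characters in one standalone GSM-7 SMS
MULTI_SEGMENT = 153   # characters per part of a concatenated SMS (header uses 7 septets)
ELLIPSIS = "..."      # three ASCII dots; "…" is not in the GSM-7 alphabet


def segments_needed(text: str) -> int:
    """Number of GSM-7 segments needed to send `text`."""
    if len(text) <= SINGLE_SEGMENT:
        return 1
    return -(-len(text) // MULTI_SEGMENT)  # ceiling division


def fit_to_budget(answer: str, max_segments: int = 1) -> str:
    """Trim `answer` at a word boundary so it fits in `max_segments` segments."""
    if max_segments < 1:
        raise ValueError("max_segments must be at least 1")
    limit = SINGLE_SEGMENT if max_segments == 1 else MULTI_SEGMENT * max_segments
    if len(answer) <= limit:
        return answer
    cut = answer[: limit - len(ELLIPSIS)]
    # If the cut lands mid-word, back up to the previous space.
    if answer[len(cut)] != " " and " " in cut:
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip() + ELLIPSIS


if __name__ == "__main__":
    long_answer = "Check the official schedule before you go. " * 10
    reply = fit_to_budget(long_answer, max_segments=1)
    print(len(reply), segments_needed(reply))
```

Sticking to a plain-ASCII ellipsis keeps the reply inside the GSM-7 alphabet; a reply containing other Unicode characters would be sent as UCS-2, which drops the budget to 70 characters for a single segment and 67 per concatenated part.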

I created Texxa because it fills a legitimate need and is something I wanted. Once I had proven to myself that yes, this thing works and is awesome, I started turning Texxa into more of a proper service; it’s simply something that other people should have access to.

Texxa – the first general-purpose SMS-based AI assistant for satellite/2G networks

- Texxa brings AI to people without a reliable data connection, with reduced equipment requirements:
  - Backcountry adventurers with a satellite connection on their phone or existing satellite devices
  - People in remote areas or on boats, people with unreliable internet connections, astronauts (probably)
  - There are nearly 1 billion feature phone users globally (particularly in emerging markets) who cannot install AI apps and often have only a 2G connection with no data
- No app, account, or internet needed.

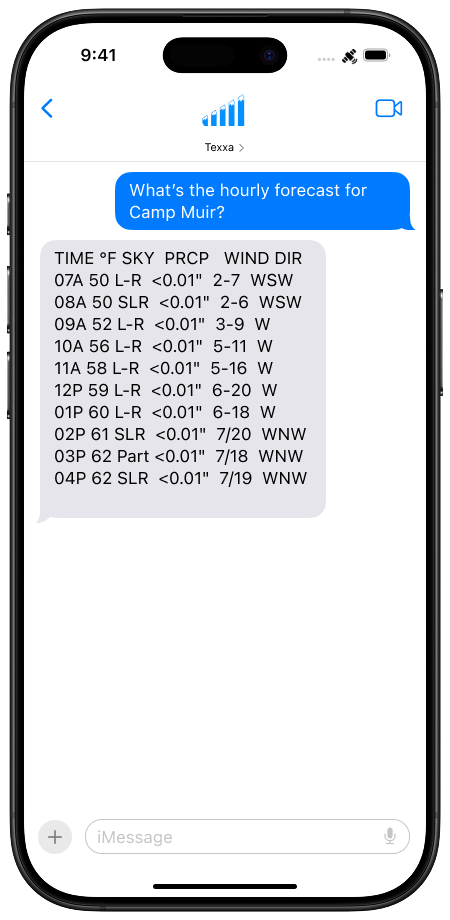

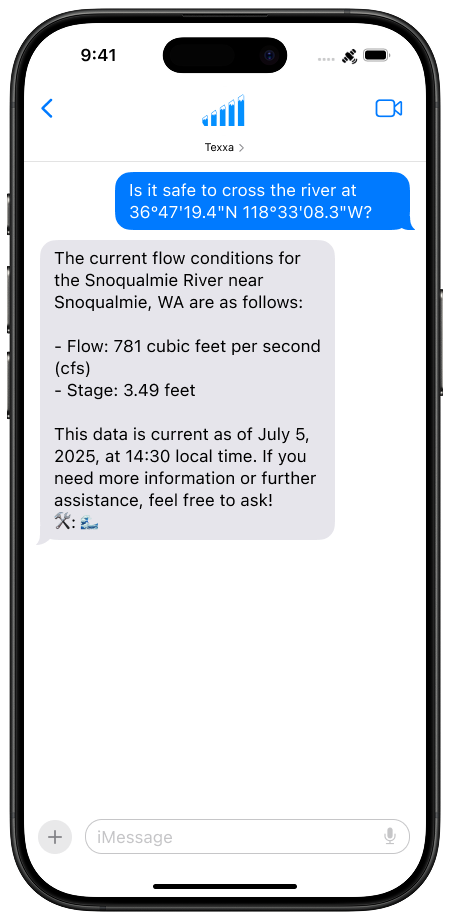

- Texxa connects them all to the broader internet: over ultra-low-bandwidth satellite and edge networks, plain SMS text messages from common phones reach an LLM-powered AI agent with access to real-time data, such as:

Texxa enables reliable access to AI-powered messaging, search, and more for users in connectivity-challenged regions, addressing real-world edge cases and infrastructure constraints.

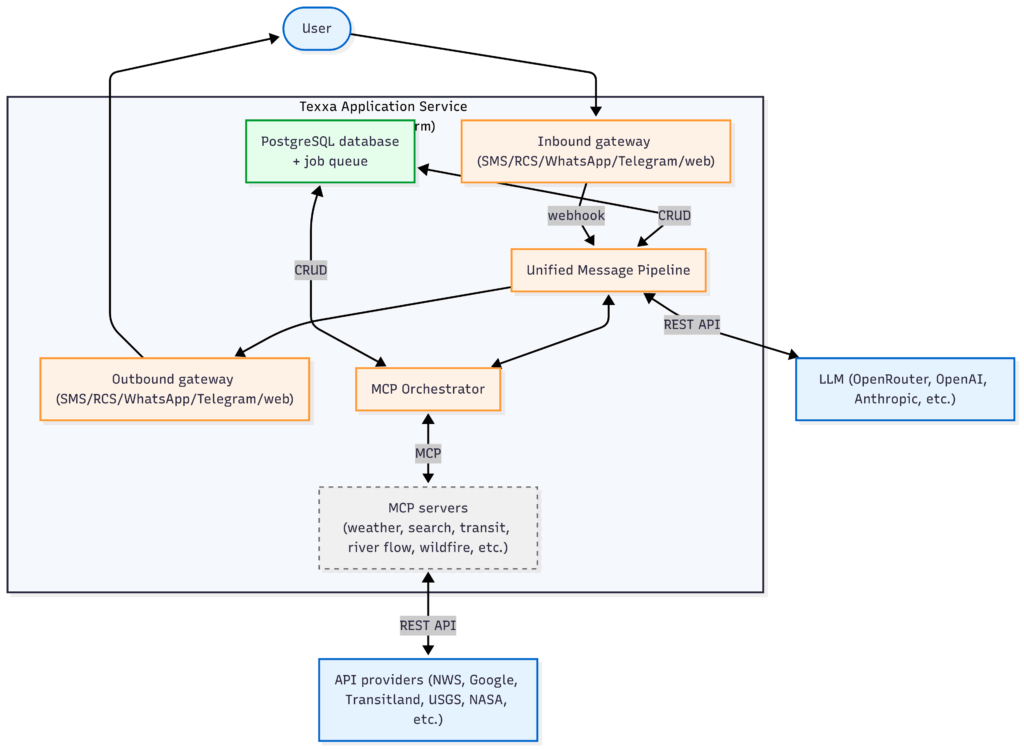

The Tech

I had the opportunity to learn SO many things, all made possible by AI: learning about new technologies, brainstorming use cases, architecting, coding, and debugging a system, and so much more, all within the span of a few months. I’m not a professional software engineer, but I’ve loved diving into this space.

So, how does Texxa work? Read these sections if you want to get technical – skip to The Journey if you don’t!

The Journey

Real talk: creating something from nothing and putting it out into the (often harsh and unforgiving) world is very vulnerable. With Texxa, most people didn’t really get it (and still don’t), and others criticized it, but enough people got it and loved it to keep me going.

It would be great to get a report ahead of time for peaks down the trail so you can plan safe climbs…That’s an amazing tool to be able to make safety decisions. This is so clever!

– u/GraceInRVA804

Waterflow data is critical beta for whitewater rafting/kayaking. A difference of a few hundred CFS can make a significant difference for how hard different rapids are.

– u/PartTime_Crusader

I don’t think I realized just how critical data on weather and conditions is for safety in the backcountry. Improving access to information can literally prevent people from getting into life-or-death situations.

My realization: If you’re not getting 1 user per day telling you this is life-changing, you’re not pushing hard enough.

This period of experimenting with AI, software, startup life, and more over the past few months has lit me up in so many new ways. ❤️

And of course, this whole experience was the sum of countless conversations, short and long… Thank you to these wonderful people for their support, inspiration, and reciprocal crazy ideas:

- Tabitha: for being contractually obligated to support me, your lawfully wedded husband 😘

- Justin: for validating every part of this experience (having lived it yourself), and for your exuberance for (I think) every single idea I had

- Mike: for believing in me without hesitation as I kept pulling the thread on this AI thing to see where it took me

- Aaron and Everan: for our meandering philosophical conversations and for wholeheartedly engaging with my usually less-than-half-baked prototypes

- Gabor and Russell: for waxing poetic with me about the impact and applications of AI

- Liesel, Min, and David: for your amazing encouragement and optimism, often when I needed it most