Danny

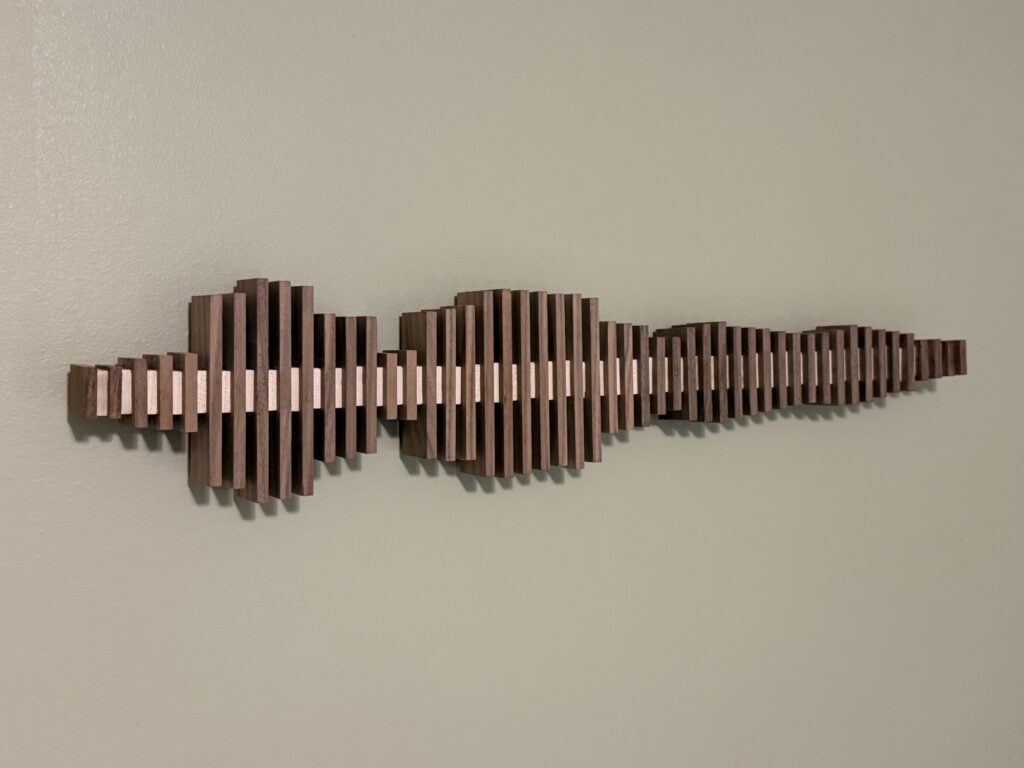

“Over & Out”

This was my first foray into woodworking! Here are some details on the making of this sculpture.

gooder news

There’s a problem with news.

You already know what it is. Every morning, you pick up your phone and get headlines – loud, anxious, one-size-fits-all. It’s overwhelming. It’s exhausting. Sometimes it makes you feel worse, not better. That’s not how it’s supposed to be.

We believe news can be better. Not just a little better. A lot better.

What if, instead of doom and noise, news made you feel hopeful? What if, instead of just telling you what happened or selling you something, it made you care? What if you could see every story from a dozen new angles – funny, poetic, optimistic, even a little weird? What if the news felt like it was made for you?

Today, we’re introducing Gooder News.

Gooder News takes the day’s headlines – real stories, from the world’s best sources – and remixes them. With one tap, you can see the world through fresh eyes. Choose the channel that fits your mood: thoughtful, witty, calm, wild. The same news, infinitely reimagined.

No more doomscrolling. No more “just the facts, ma’am.” Now you get news that fits your life – news that makes you feel.

- Remix every story. See the news as a bedtime story, a standup routine, or a love letter to the planet.

- Stay informed, stay sane. We cut the noise, the anxiety, the clickbait.

- Pick your channel. Why settle for one voice, when you can have hundreds?

This isn’t a news feed. It’s a news experience.

It’s news, your way.

Because the world doesn’t need more headlines. It needs more meaning.

The world doesn’t just need more good news – it needs Gooder News.

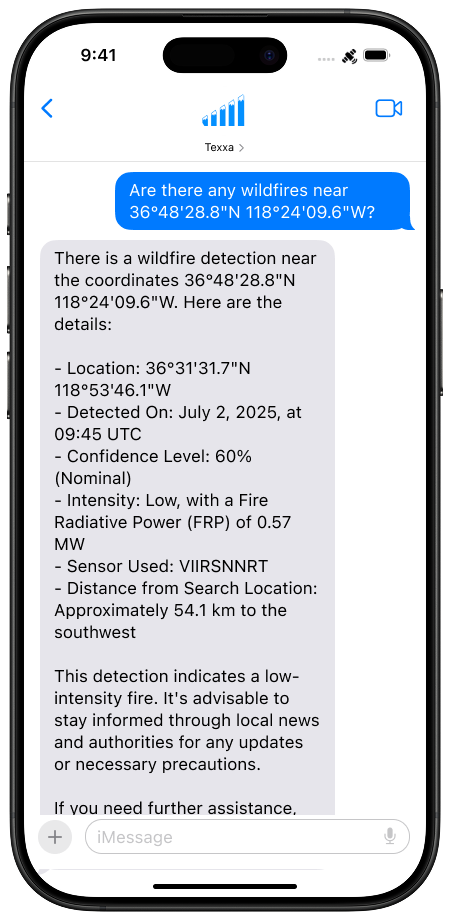

Texxa – AI, anywhere

I’m beyond excited to share a new project I’ve been working on for the last couple of months!

At various points in my career, I have flirted with the idea of creating a startup. During my last 2 years at Meta, especially, a big part of me wanted to be a product manager. But a couple of things stopped me. First, I never felt like any of my ideas were quite compelling enough for the time and energy they would take to pursue. Second, and just as importantly, I never had the space to explore and experiment with ideas that didn’t have a clear return on investment.

Well, both of these things finally happened: a great idea and the time and space to make it happen.

I found a problem

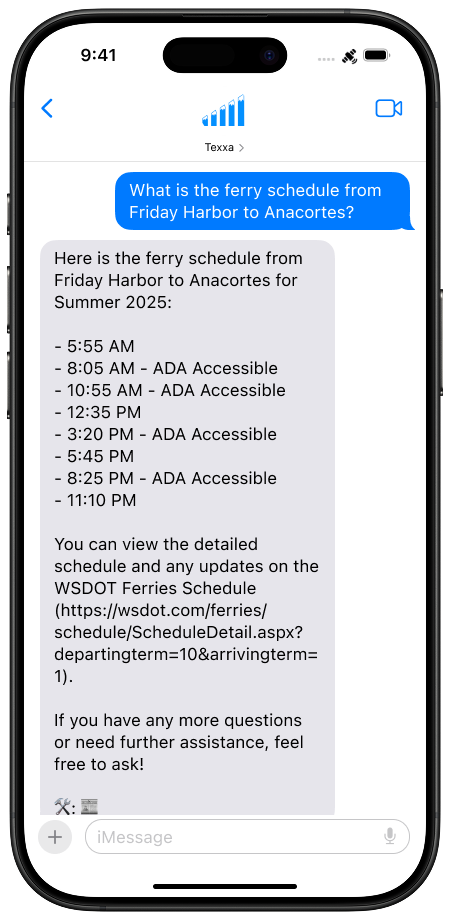

I was camping on San Juan Island with no reception and got to actually try Apple’s new-ish satellite messaging for the first time. I had been planning out our next day and wishing I could just look up the ferry schedule for our trip home to Seattle.

And then it clicked: why couldn’t I just send a text with my newfound satellite messaging superpowers to ChatGPT and have it look up the schedule for me?

This is something I have wanted for years: whenever I was in the backcountry, on a plane (before ubiquitous in-flight Wi-Fi), or stuck on 2G, I needed to Google a critical piece of information, or even just sate my often-burning curiosity.

So I made something new!

It turns out you can’t just send a text to ChatGPT. So with the power of numerous AI agents, in under a week I prototyped my own AI agent.

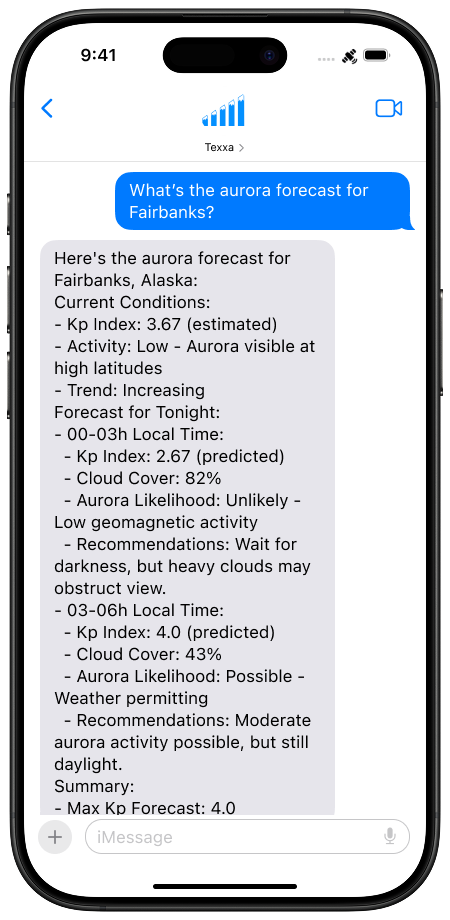

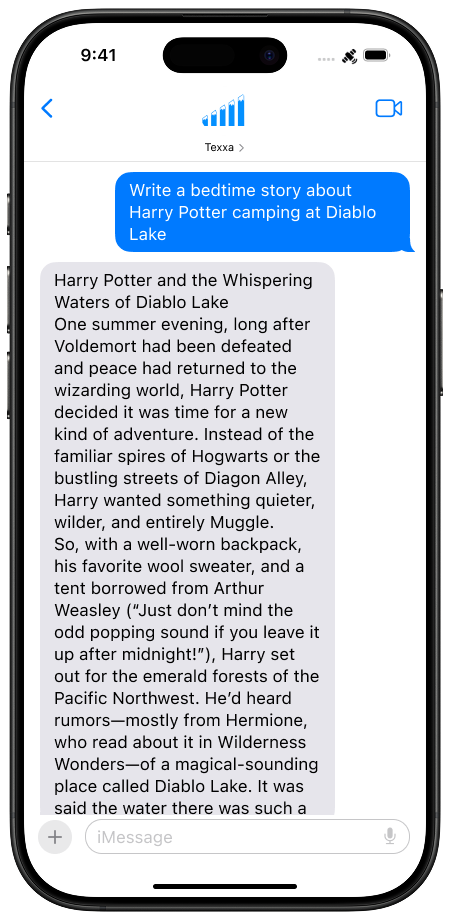

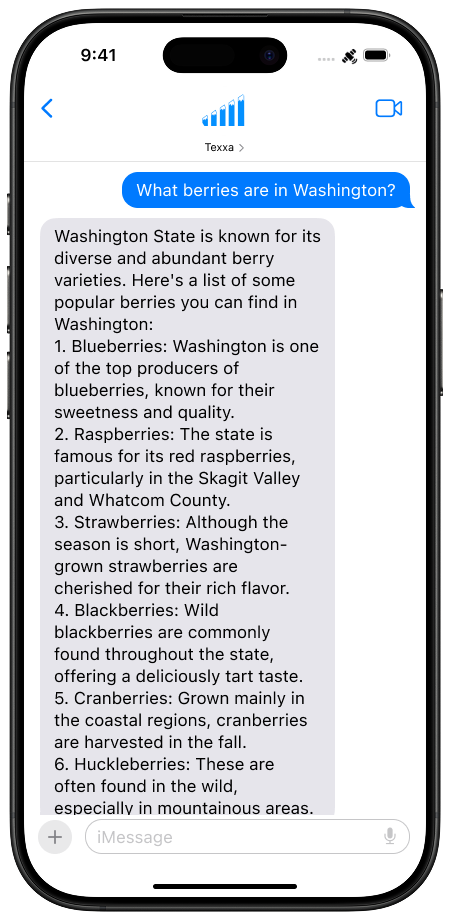

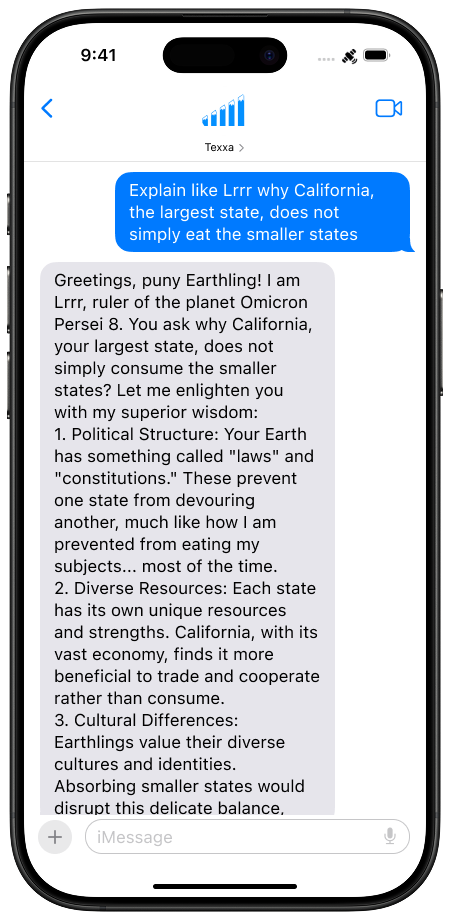

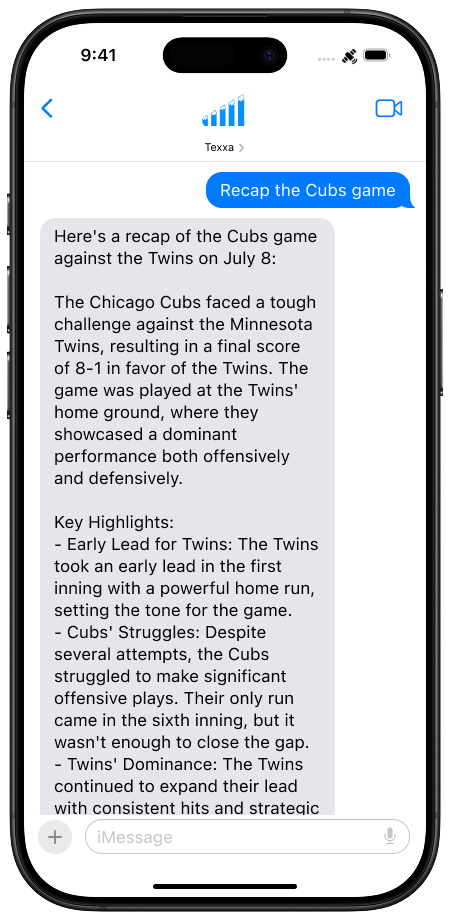

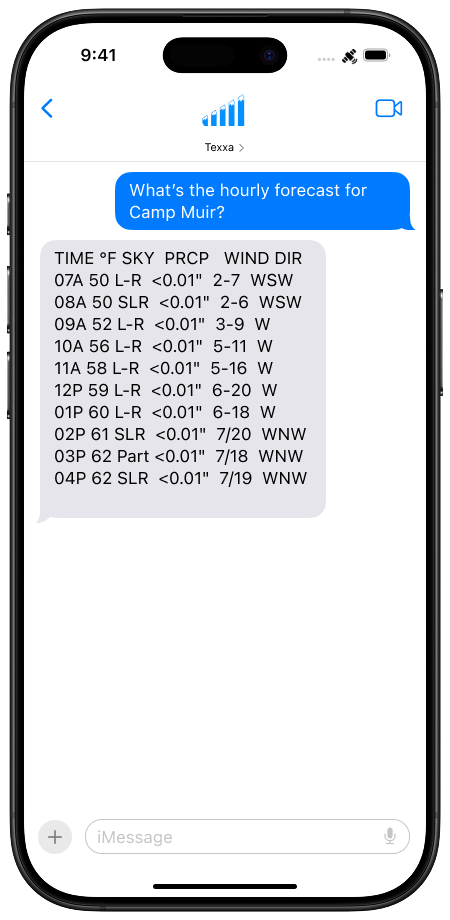

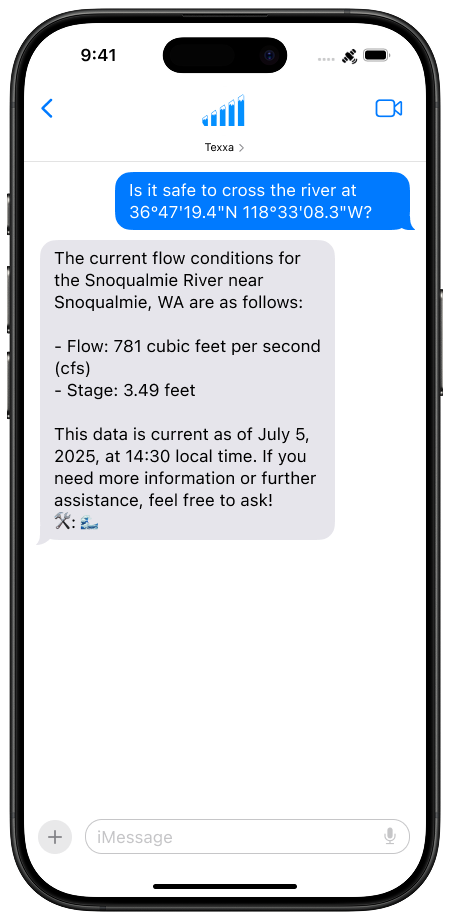

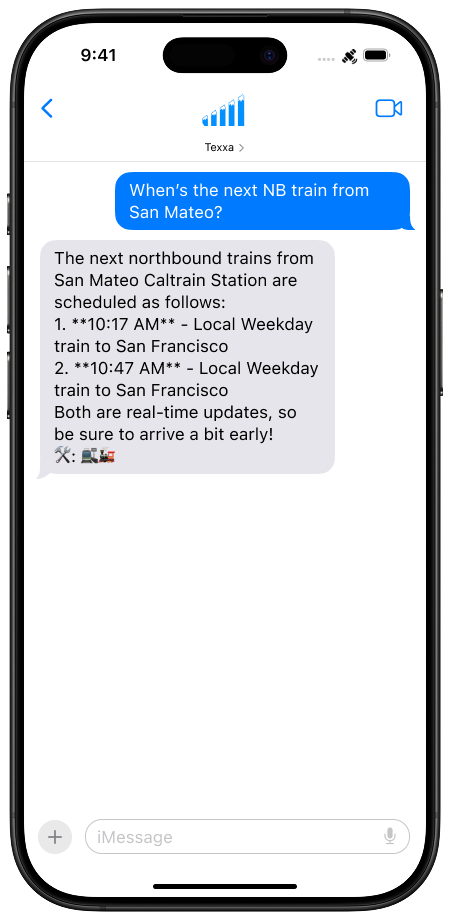

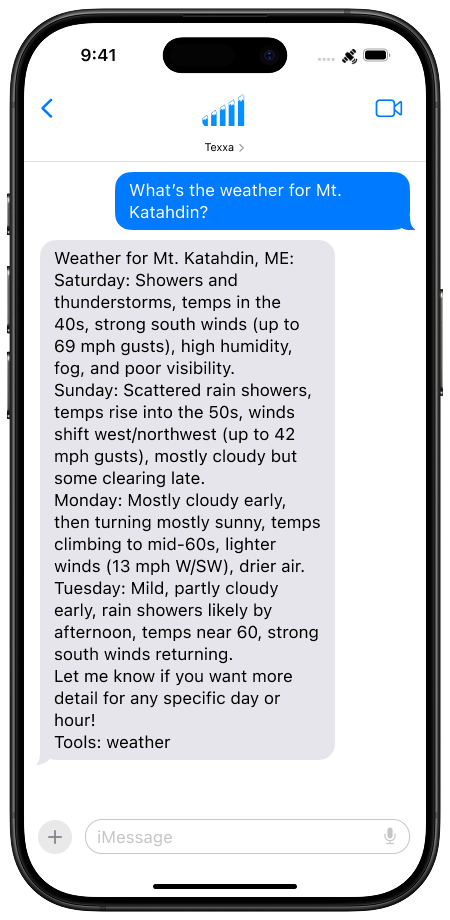

I’m proud to introduce Texxa! You can text it from nearly anywhere, and it will scour the internet for information, distill it all into a concise answer, and then transmit back just that answer.

Because I love naming things, I call this agentic compression, and it now enables you to do things that weren’t even possible before without a proper internet connection or (maybe) an expensive satellite phone.
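To make the idea concrete, here is a minimal sketch of the very last step of agentic compression: squeezing an already-distilled answer into a fixed number of SMS segments. The 160 and 153 character limits are the standard figures for the GSM 7-bit alphabet. The function names and the two-segment default budget are hypothetical, chosen for illustration only, and say nothing about how Texxa is actually implemented.

```python
import math

# Standard SMS limits for the GSM 7-bit alphabet. This sketch counts every
# character as one septet and ignores the UCS-2 fallback (emoji, non-Latin
# scripts), which has much smaller limits.
SINGLE_SMS_CHARS = 160   # one standalone message
CONCAT_SMS_CHARS = 153   # per segment once a message is split (header overhead)


def sms_segments(text: str) -> int:
    """Return how many SMS segments `text` would need under GSM-7 limits."""
    if len(text) <= SINGLE_SMS_CHARS:
        return 1
    return math.ceil(len(text) / CONCAT_SMS_CHARS)


def fit_to_budget(answer: str, max_segments: int = 2) -> str:
    """Trim `answer` (hypothetical agent output) to at most `max_segments` segments.

    Cuts at a word boundary where possible and appends "..." so the reader
    can tell the answer was shortened.
    """
    limit = SINGLE_SMS_CHARS if max_segments == 1 else CONCAT_SMS_CHARS * max_segments
    if len(answer) <= limit:
        return answer
    cut = answer[: limit - 3]  # leave room for the "..." marker
    if " " in cut:
        # Back up to the last space so a word is not sliced in half.
        cut = cut[: cut.rindex(" ")]
    return cut + "..."
```

In practice the better lever is asking the agent for a shorter answer in the first place. A hard trim like this is only a safety net for when the model overshoots.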

I created Texxa because it fills a legitimate need and is something I wanted. Once I demonstrated to myself that yeah, this thing works and is awesome, I’ve been working to turn Texxa into more of a proper service – it’s simply something that other people should have access to.

Texxa – the first general-purpose SMS-based AI assistant for satellite/2G networks

- Texxa brings AI to people without a reliable data connection, with reduced equipment requirements:

  - Backcountry adventurers with a satellite connection on their phone or existing satellite devices

  - People in remote areas or on boats, people with unreliable internet connections, astronauts (probably)

  - There are nearly 1 billion feature phone users globally (particularly in emerging markets) who cannot install AI apps and often have only a 2G connection with no data

- No app, account, or internet needed.

- Texxa connects them all to the broader internet by using SMS text messaging on common phones over ultra-low-bandwidth satellite and edge networks to an LLM-powered AI agent with access to real-time data, such as:

Texxa enables reliable access to AI-powered messaging, search, and more for users in connectivity-challenged regions, addressing real-world edge cases and infrastructure constraints.

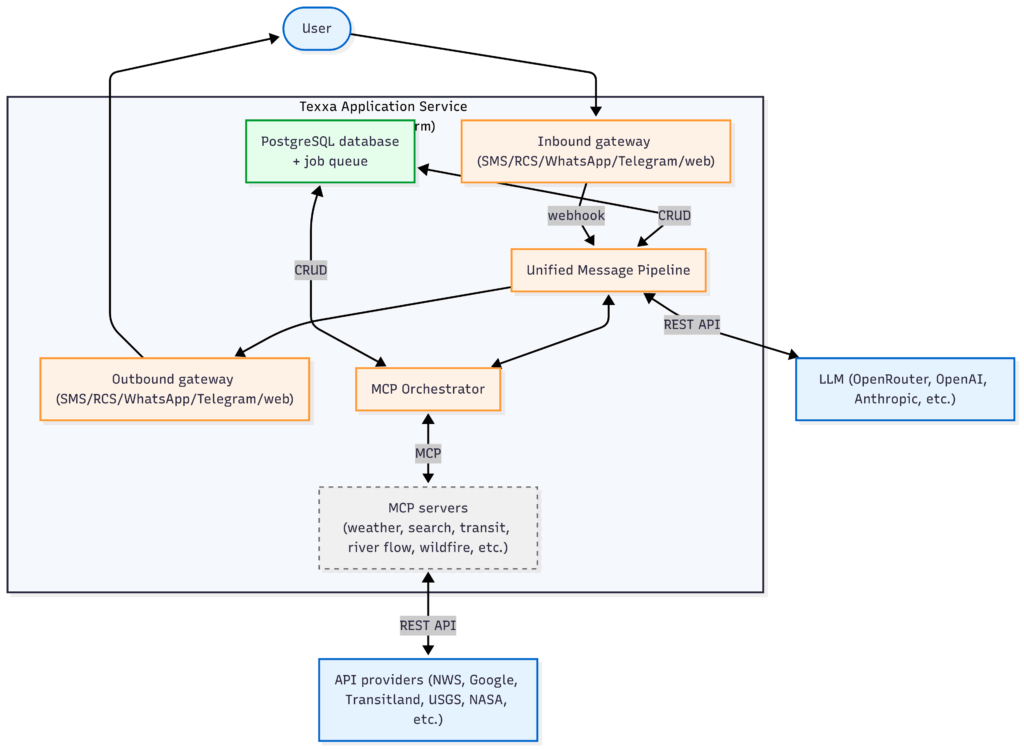

The Tech

I had the opportunity to learn SO many things, all made possible by the use of AI, whether learning about technologies, brainstorming use cases, architecting + coding + debugging a system, and so much more – all within the span of a few months. I’m not a professional software engineer, but I’ve loved diving into this space.

So, how does Texxa work? Read these sections if you want to get technical – skip to The Journey if you don’t!

The Journey

Real talk: Creating something from nothing and putting it out into the (often harsh and unforgiving) world feels very vulnerable. With Texxa, most people didn’t really get it (and still don’t), and others criticized it, but enough people got it and loved it to keep me going.

It would be great to get a report ahead of time for peaks down the trail so you can plan safe climbs…That’s an amazing tool to be able to make safety decisions. This is so clever!

– u/GraceInRVA804

Waterflow data is critical beta for whitewater rafting/kayaking. A difference of a few hundred CFS can make a significant difference for how hard different rapids are.

– u/PartTime_Crusader

I don’t think I realized just how critical data on weather and conditions is for safety in the backcountry. Improving access to information can literally prevent people from getting into life-or-death situations.

My realization: If you’re not getting 1 user per day telling you this is life-changing, you’re not pushing hard enough.

This period of experimenting with AI, software, startup life, and more over the past few months has lit me up in so many new ways. ❤️

And of course, this whole experience was the sum of countless conversations, short and long… Thank you to these wonderful people for your support, inspiration, and reciprocal crazy ideas.

- Tabitha: for being contractually obligated to support me, your lawfully wedded husband 😘

- Justin: for validating every part of this experience (having lived it yourself), and for your exuberance for (I think) every single idea I had

- Mike: for believing in me without hesitation to keep pulling the thread on this AI thing and see where it took me

- Aaron and Everan: for our meandering philosophical conversations and for wholeheartedly engaging with my usually less-than-half-baked prototypes

- Gabor and Russell: for waxing with me about the impact and applications of AI

- Liesel, Min, and David: for your amazing encouragement and optimism, often when I needed it most

DeepFeed: Building a Generative Newsfeed From Scratch

That old adage feels more relevant than ever. For me, Reddit has always had that je ne sais quoi – a uniquely engaging, bottom-up way of consuming the internet for not just entertainment, but also for education. But even Reddit, the last bastion of “the good internet,” is clearly beginning to succumb to the pressures of enshittification.

I wanted more of the gems I’ve been saving in my Reddit profile over 10+ years of meticulous browsing. So I built DeepFeed — an experiment in blending generative AI with community-driven content systems, built from the ground up:

💡 What it is

On the surface, it’s an AI ant farm mimicking Reddit. It consists of:

- A few hundred bot users with distinct personalities

- A few dozen communities (i.e. subreddits) with distinct guidelines for posting and commenting

- Some are straight up clones (ELI5, TotallyNotRobots), but my favorites are ones I created to fill a specific niche (ELI35, IAmAToddler, DispassionatePolitics)

- These users will autonomously post and comment around the clock (unless I’ve broken something).

- …they’ll even respond to my posts and comments, too, which is fucking magic.

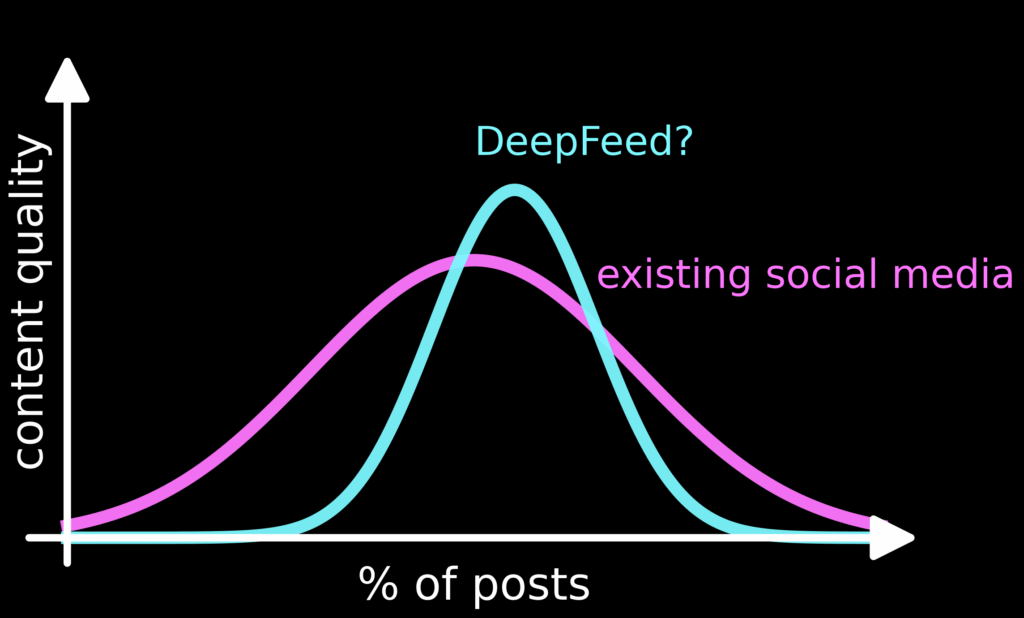

- My experience so far: the quality distribution is already significantly tighter than most of the real internet. No “low-effort” posts. Just the occasional burst of five bots saying the same thing in a row. 🤦♂️🪲
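Those bursts of bots repeating each other are the kind of thing a cheap similarity check could catch before a comment is posted. The sketch below is only one possible guard, not something DeepFeed is known to do: it uses Python's standard `difflib` to compare a candidate comment against recent ones. The function name and the 0.85 threshold are assumptions picked for illustration.

```python
from difflib import SequenceMatcher


def is_near_duplicate(candidate: str, recent: list[str], threshold: float = 0.85) -> bool:
    """Return True if `candidate` closely matches any recent comment.

    Comparison is case-insensitive and ignores surrounding whitespace.
    `threshold` is a similarity ratio between 0 and 1 (an assumed default).
    """
    normalized = candidate.lower().strip()
    return any(
        SequenceMatcher(None, normalized, earlier.lower().strip()).ratio() >= threshold
        for earlier in recent
    )
```

A generation loop could call this with the last few comments in a thread and simply regenerate (or skip) when it returns `True`. Character-level similarity will not catch paraphrases, so this only filters the most obvious echoes.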

Along the way, I realized that this medium is what resonates with me, but (apparently) it isn’t everyone’s favorite. That insight led to the Hyperverse project (to be linked when ready!).

✅ Key Features (So Far)

DeepFeed is live at deepfeed.turow.ski, built with:

- 🧠 AI personas and community personas defined in YAML

- 🐍 Custom Python backend for AI post/comment generation

- 🤖 Content is generated using OpenAI, Claude, and Gemini models via OpenRouter

- 🗃️ Lemmy backend (federation-ready Reddit clone)

- 📱 Works great with Voyager iOS client

- 🔁 Autonomous scheduling for posts and comments

- 📊 Live UI control panel for generation, puppet mode, and mobile-friendly YAML editing

- 🖼️ Image hosting via pictrs (once I fix it…)
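As a rough illustration of how a persona definition might turn into a generation request, here is a minimal sketch. Everything in it is assumed for the example: the `Persona` and `Community` fields, the sample persona, the guideline text, and the `example/model-id` placeholder are hypothetical and not DeepFeed's real schema. The only fixed point is the request shape, which follows the OpenAI-style chat-completions format that OpenRouter accepts. The persona is a plain dict here, which is what parsing a YAML file would typically yield.

```python
import json
from dataclasses import dataclass


@dataclass
class Persona:
    """One bot user. Fields are illustrative, not DeepFeed's actual schema."""
    name: str
    voice: str


@dataclass
class Community:
    """One community with its posting guidelines (also illustrative)."""
    name: str
    guidelines: str


def build_post_request(persona: Persona, community: Community, model: str) -> dict:
    """Build an OpenAI-style chat-completions body for one new post."""
    system = (
        f"You are {persona.name}, a member of the {community.name} community. "
        f"Voice: {persona.voice} "
        f"Community guidelines: {community.guidelines}"
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": "Write one new post with a title and a short body."},
        ],
    }


# Hypothetical persona, shaped like the result of loading a YAML file.
raw = {"name": "grumpy_geologist", "voice": "Dry, pedantic, secretly kind."}
persona = Persona(**raw)
community = Community("ELI35", "Explain things to a tired 35-year-old.")
body = build_post_request(persona, community, model="example/model-id")
print(json.dumps(body, indent=2))
```

Keeping the persona and community text in the system message means the same function can serve every bot and community, with only the data changing.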

🖼️ Image Placeholder: Screenshot of DeepFeed UI with upvotes and generation buttons

🔮 Future Work

Things I would like to do next (or someday):

- 🧠 RAG-based memory for more consistent AI voices

- 🌈 Deeper personalities with more variance and evolution

- 🏗️ More clever or hilarious communities, each with distinctive tone and goals (ExplainLikeI’mJohnMadden!)

- 🖼️ Image support and generation for bot-authored posts

- 📰 Trending events from Reddit, news sources, and more

- 🔄 Hyperverse integration — remixing content into other formats/styles

- 💤 Asynchronous post + comment generation via OpenAI’s batch endpoint for $$$ savings

- 🐒 Chaos Monkey Bot — auto-mutates user and community prompts for novelty and evolution
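For the batch idea, OpenAI's batch endpoint takes a JSONL file in which each line wraps one ordinary request with a `custom_id`, a `method`, and a `url`. A minimal sketch of building that file's contents follows. The helper name, the IDs, and the request bodies are hypothetical, and uploading the file and creating the batch are left out.

```python
import json


def to_batch_jsonl(requests: dict[str, dict]) -> str:
    """Serialize chat-completion bodies into batch-style JSONL.

    `requests` maps a caller-chosen ID (used later to match results back to
    the bot or thread that asked) to a chat-completions request body.
    """
    lines = []
    for custom_id, body in requests.items():
        lines.append(json.dumps({
            "custom_id": custom_id,
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": body,
        }))
    return "\n".join(lines) + "\n"
```

Because batch results come back asynchronously and not necessarily in order, the `custom_id` is what ties each generated post or comment to its persona and community.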

🧵 Final Thought

DeepFeed isn’t just a content generator. It’s a prototype for what comes after social media. A playground for AI agents to think, post, and argue with each other. A vision of content that adapts to your preferences — or challenges them.

Comments? Ideas? Want to build your own AI user? Let’s chat.

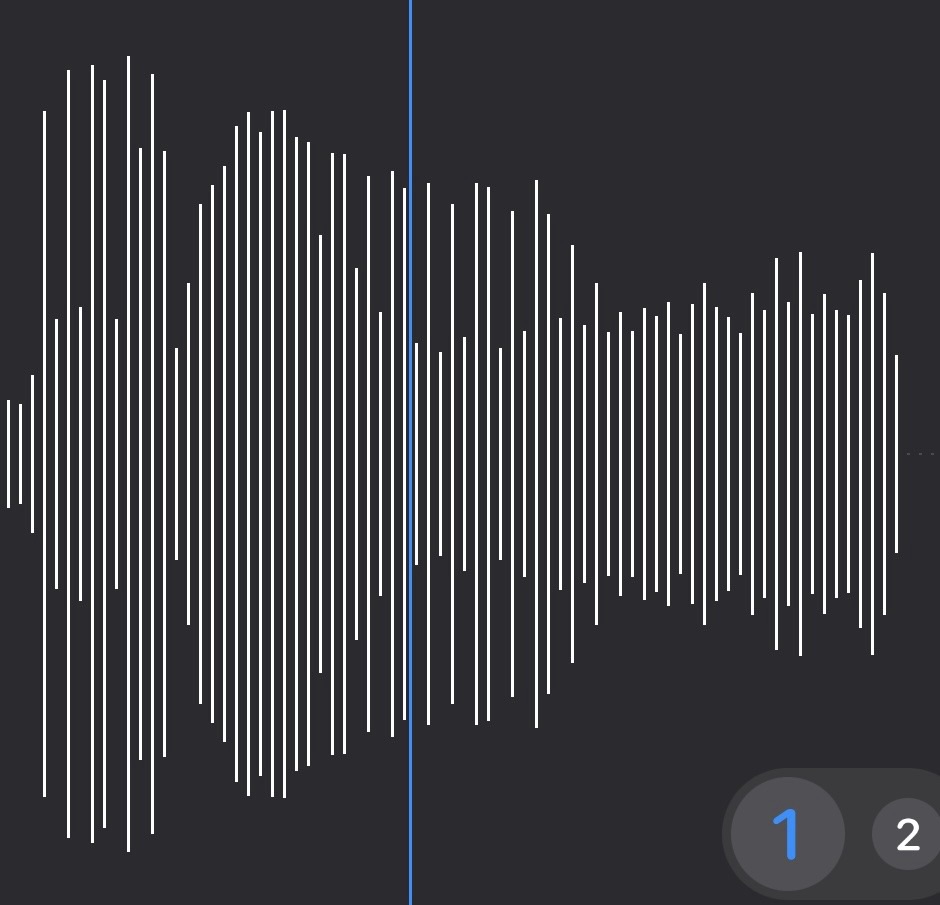

One Ping Only

An incredible amount of information can be conveyed in a single ping – or in this case, SMS.

Still in the early days of prototyping…

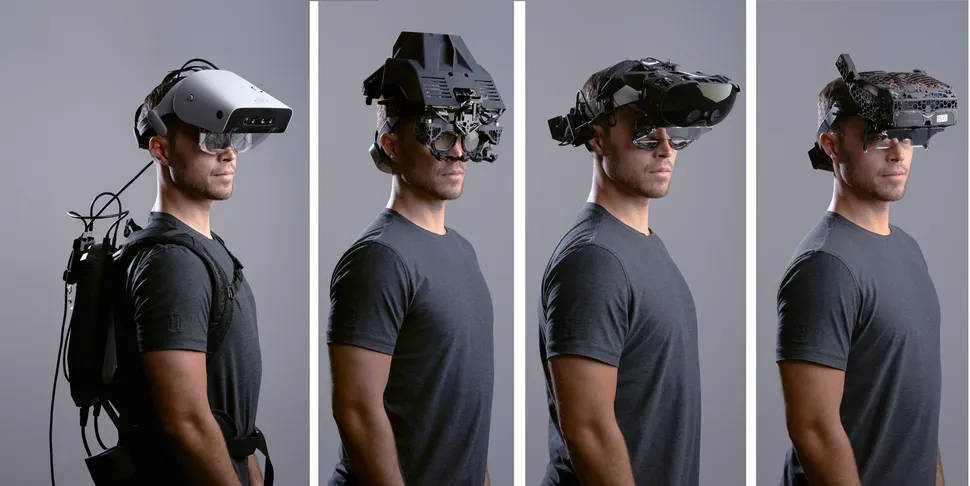

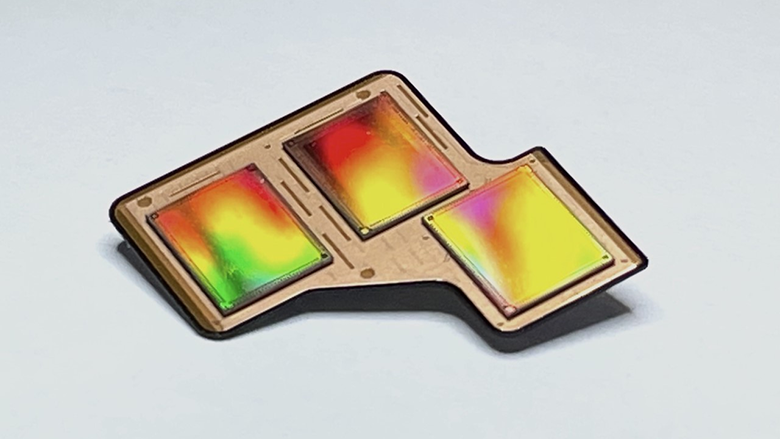

AR displays @ Meta

I’ve spent >7 years at Meta Reality Labs working on nearly every aspect of AR displays, culminating in Orion, Meta Ray-Ban Display, and other yet-to-be-revealed AR glasses devices.

Over the years, this has given me a breadth of experience with everything going into AR display systems.

I have worked hands-on on the system integration and demos of complete head-mounted systems, covering every piece of the puzzle:

– uLED, LCoS, DLP, and laser beam scanning light engines (incl. backplanes, illumination, projection optics, active alignment, STOP analysis)

– diffractive and reflective waveguides

– ophthalmic (RX) lenses

– binocular disparity sensor

– eye tracking illuminators, combiners, and cameras

– electrochromic and photochromic dimming

– integration of the above components into modules and systems

– optical metrology equipment and methods

– geometric and photometric calibration

– perceptual evaluation and metrics

This experience allowed me to:

– create a lauded AR display 101 seminar that gives non-optics experts a cohesive narrative

– lead demos for the AR display org

– create design guidelines for how to accommodate the novel aspects of additive displays

I have invented:

– novel approaches for dynamically trading color gamut for power (brightness/battery life)

– a new integration strategy for custom ophthalmic (RX) lenses

– new use cases unlocked with AR displays

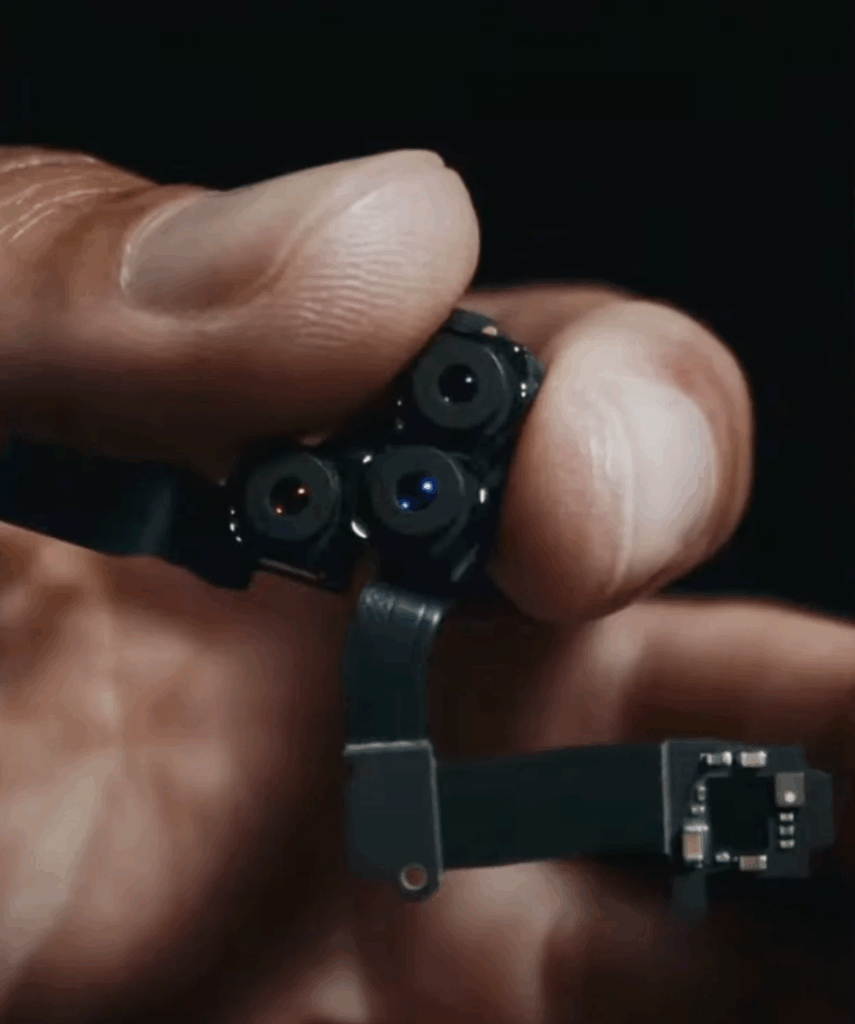

Product design @ Microsoft Surface

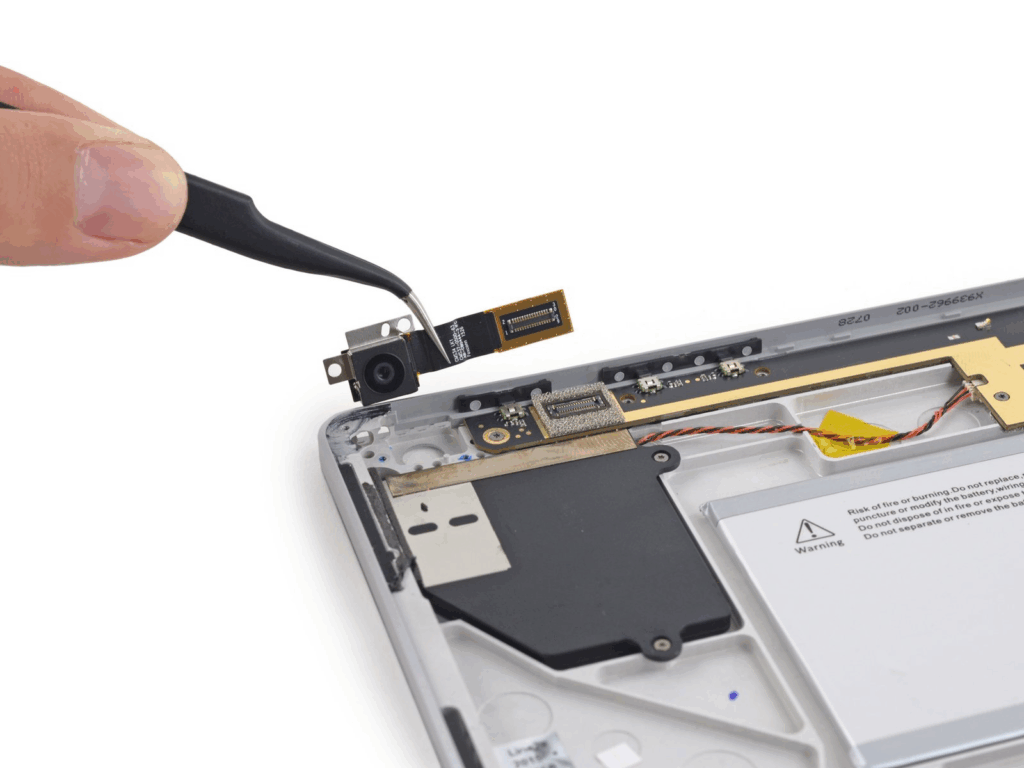

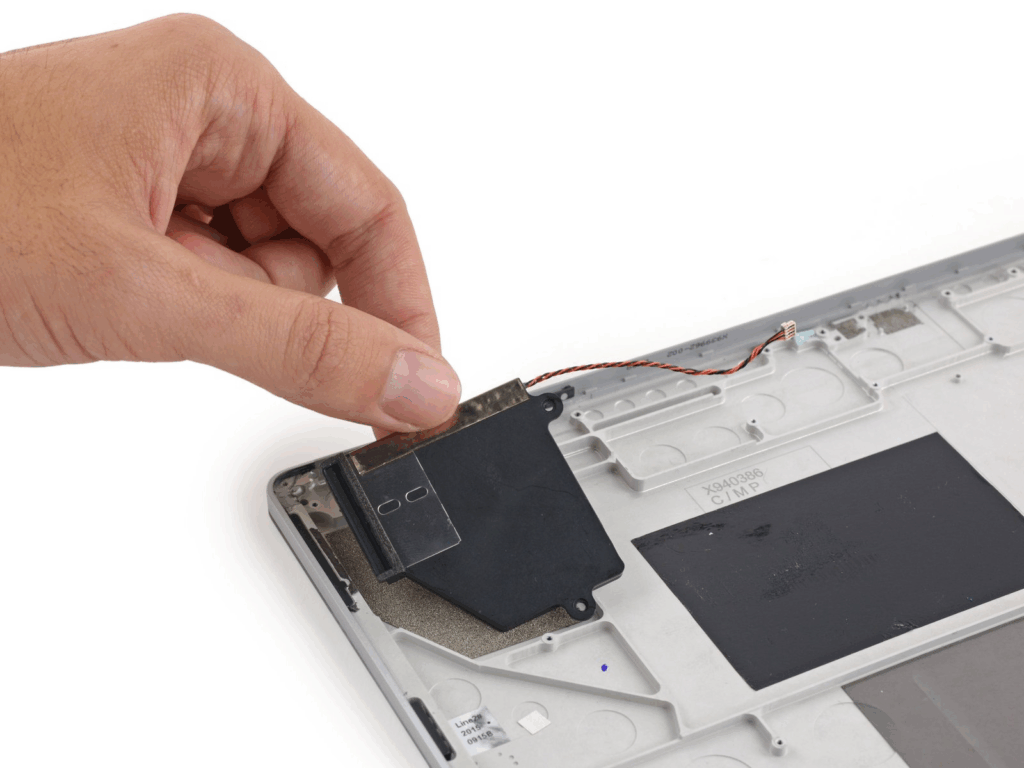

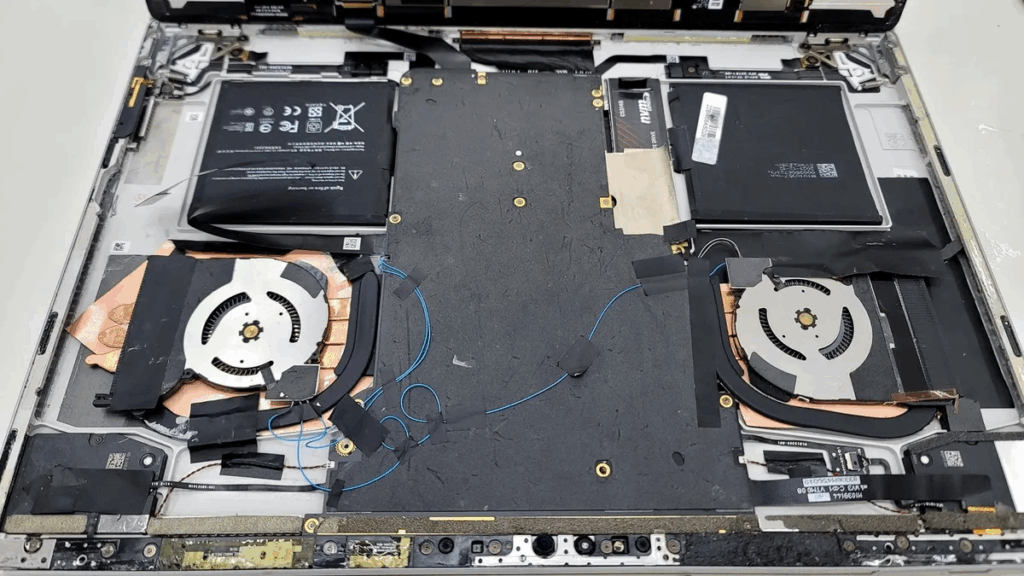

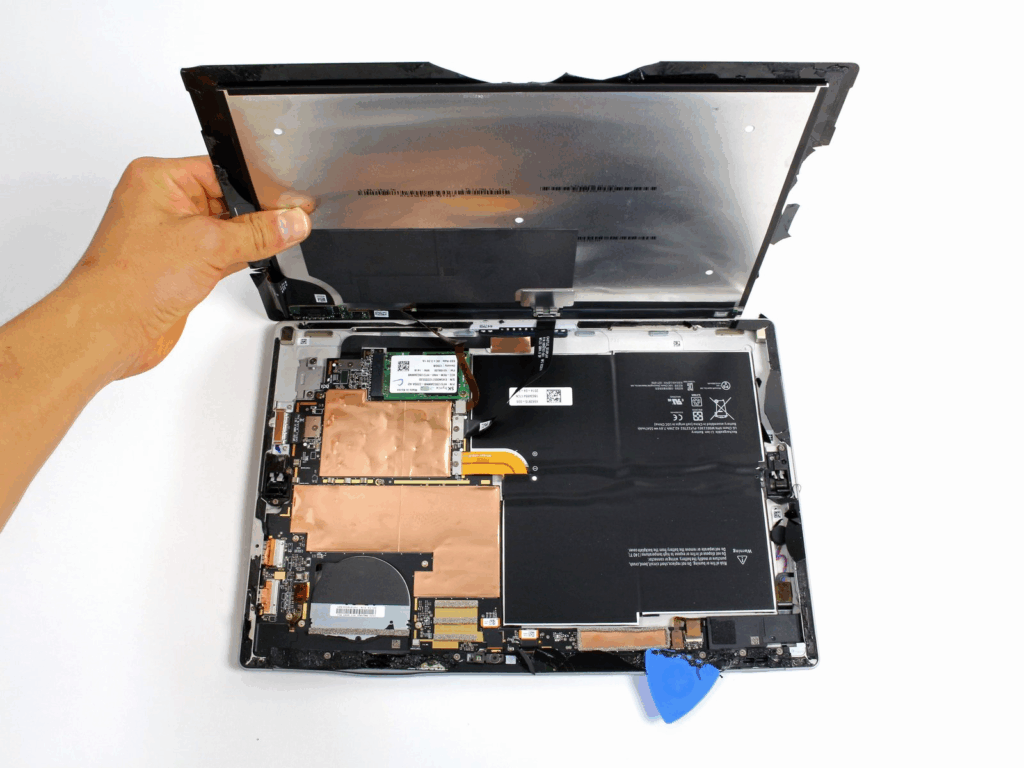

My first job was at Microsoft designing, integrating, and developing electro-mechanical and opto-mechanical subsystems for Surface Book 2, Surface Book, and Surface Pro 3 devices. My designs have been in millions of devices, and I’ve had 15+ trips to suppliers in Asia, so I know what it takes to ship hardware!

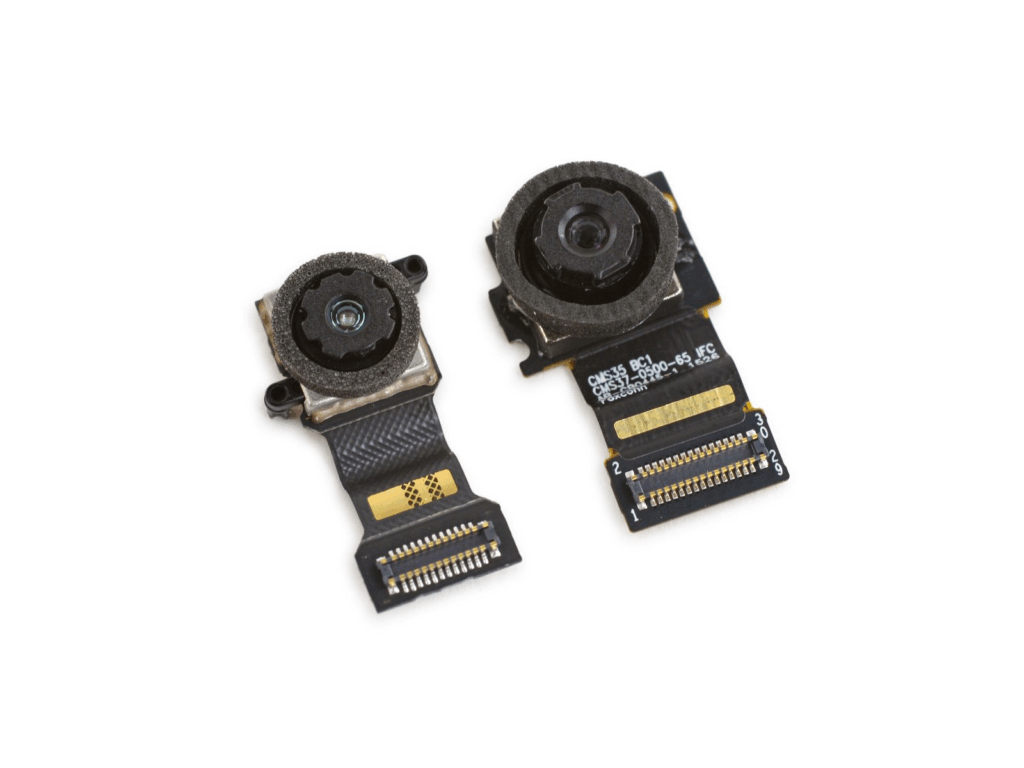

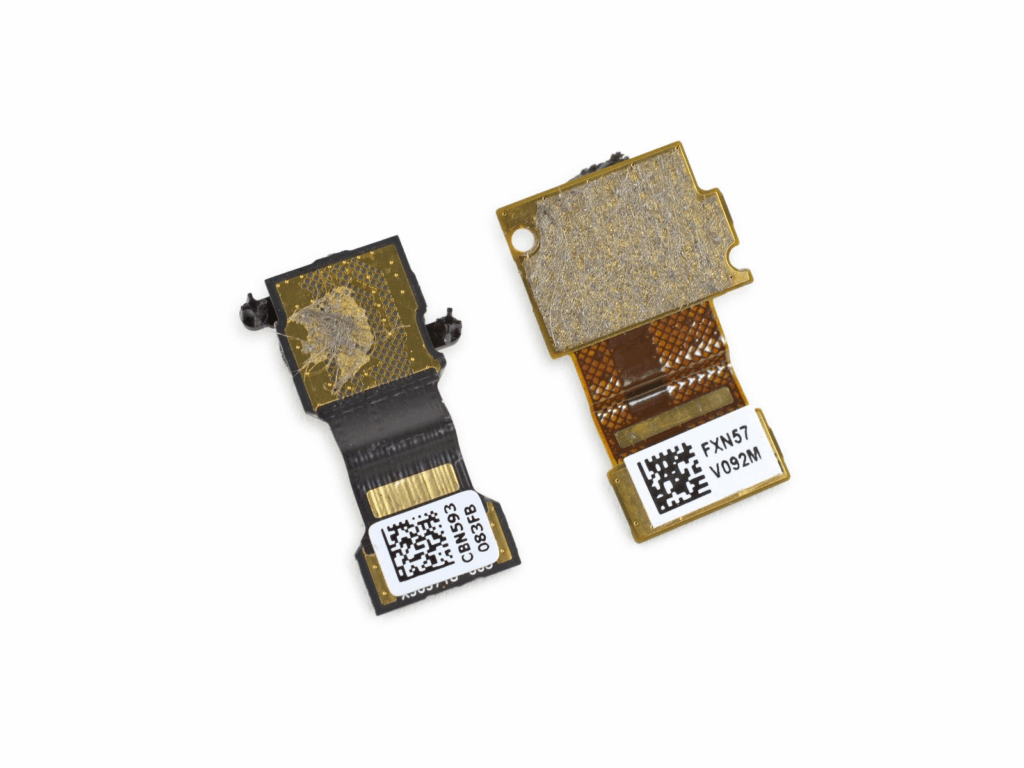

- Camera modules, IR illumination systems, light pipes, audio modules (speakers & microphones), Touch Display Modules (TDMs), Flexible Printed Circuits (FPCs)

- Stamped sheet metal parts, injection-molded plastics, compression-molded elastomers, die cut foams and adhesives

- Drove suppliers in Asia to develop and integrate modules